Artificial Intelligence (AI) has moved from a futuristic concept to a practical tool in clinical research and healthcare. From accelerating patient recruitment to assisting with real-time data analysis, AI now drives many cutting-edge advances. Life sciences organizations are deeply committed to patient safety, data protection, and data privacy, and they understand that innovation must meet the highest regulatory standards. To adopt AI without compromising those commitments, forward-thinking companies are using a tiered approach to AI risk management that gives possibility and protection equal weight.

Why AI Matters in Life Sciences Now

Clinical trials generate colossal amounts of data—from patient surveys and lab results to imaging data and electronic medical records. AI can uncover insights that might go unnoticed by traditional analysis and streamline processes that often require hours of expert labor. By selectively automating manual tasks and surfacing patterns, teams can focus more on patient care, advanced analytics, and strategic decision-making. With these benefits, it’s easy to see why AI has become essential to any visionary life sciences organization.

However, this sector is unlike typical consumer or enterprise industries. It is heavily regulated to protect patient welfare, ensure data integrity, and uphold strict privacy requirements. Breaches of personal health information can result in more than reputational damage; they can significantly affect individuals whose data is compromised and trigger a cascade of serious consequences, spanning legal, financial, operational, and ethical dimensions. For these reasons, adopting AI responsibly isn’t just nice to have—it’s an absolute must.

The Challenge: Balancing Innovation and Compliance

Adopting AI tools in a regulated environment is a balancing act. On one hand, there’s an urgent need for AI innovation to reduce costs, speed up clinical trials, and get life-saving therapies to market faster. On the other hand, every new technology introduces potential risks. These can include data exposure, unverified algorithms, supplier vulnerabilities, and misaligned processes that fall short of regulatory standards.

How does a life sciences leader navigate these complexities? At Castor, we have landed on a tiered system. By categorizing AI use cases into distinct risk levels, we define which safeguards, validations, and data protections are required in each scenario.

Introducing Castor’s Three-Tier Framework

Castor uses a tiered system to evaluate AI usage. It is a risk-based approach that aligns well with the EU AI Act. We are sharing it here to invite your feedback and, we hope, to offer a helpful foundation for your own organization:

- Tier 1 – Low-Risk AI Utilization:

  - Involves no personal (health) information and no customer-specific information.

  - Used for experiments, proof-of-concept projects, or internal brainstorming.

  - Requires baseline security certifications (e.g., ISO 27001 or SOC 2) and assurance that data isn't retained or used to train third-party models.

- Tier 2 – Medium-Risk AI Utilization:

  - May include confidential business data (such as a study protocol) or personal information, but no personal health information.

  - Not patient-facing; primarily used for internal or sponsor-facing tasks (e.g., assisting with protocol creation or generating test cases).

  - Requires supplier vetting, robust security measures, and firm guarantees that the AI provider does not retain data long-term.

- Tier 3 – High-Risk AI Utilization:

  - Involves patients' personal health information, potentially including especially sensitive healthcare data.

  - A failure here could compromise clinical trial integrity or patient safety.

  - Often requires region-specific data storage, stringent security certifications, and thorough validation (installation and operational qualification (IQ/OQ), audit documentation, and strict data privacy protocols).
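To make the tier criteria concrete, here is a minimal, hypothetical sketch of how they could be expressed as a decision rule. Everything in it is our own illustration rather than a Castor tool or anything defined by the EU AI Act: the `UseCase` fields, the `classify_tier` and `required_safeguards` functions, and the safeguard lists are simplified from the bullets above. It also makes three labeled assumptions: customer-specific data places a use case in Tier 2, any patient-facing use case is treated as Tier 3 (since Tier 2 is defined as not patient-facing), and each tier inherits the safeguards of the tiers below it.

```python
from dataclasses import dataclass
from enum import IntEnum


class Tier(IntEnum):
    LOW = 1     # Tier 1: no personal or customer-specific data
    MEDIUM = 2  # Tier 2: confidential business data or personal info, no PHI
    HIGH = 3    # Tier 3: personal health information or patient-facing


@dataclass(frozen=True)
class UseCase:
    # Hypothetical attributes distilled from the tier descriptions.
    name: str
    uses_personal_health_info: bool = False
    uses_personal_info: bool = False
    uses_confidential_business_data: bool = False
    uses_customer_specific_data: bool = False  # assumption: maps to Tier 2
    patient_facing: bool = False               # assumption: maps to Tier 3


# Illustrative minimum safeguards per tier (simplified from the list above).
SAFEGUARDS = {
    Tier.LOW: [
        "baseline certification (e.g., ISO 27001 or SOC 2)",
        "no data retention or third-party model training",
    ],
    Tier.MEDIUM: [
        "supplier vetting",
        "robust security measures",
        "no long-term data retention by the provider",
    ],
    Tier.HIGH: [
        "region-specific data storage",
        "stringent security certifications",
        "IQ/OQ validation and audit documentation",
        "strict data privacy protocols",
    ],
}


def classify_tier(use_case: UseCase) -> Tier:
    """Return the highest tier triggered by the use case's data and audience."""
    if use_case.uses_personal_health_info or use_case.patient_facing:
        return Tier.HIGH
    if (use_case.uses_personal_info
            or use_case.uses_confidential_business_data
            or use_case.uses_customer_specific_data):
        return Tier.MEDIUM
    return Tier.LOW


def required_safeguards(tier: Tier) -> list[str]:
    """Collect safeguards for this tier and every lower tier (assumed cumulative)."""
    return [s for t in Tier if t <= tier for s in SAFEGUARDS[t]]


# Usage: a protocol-drafting assistant touches confidential business data only.
protocol_drafting = UseCase(
    "protocol drafting assistant", uses_confidential_business_data=True
)
tier = classify_tier(protocol_drafting)
print(tier.name)  # MEDIUM
print(required_safeguards(tier))
```

Classifying by the most sensitive data a use case touches, rather than by the tool itself, follows the framework's focus on use cases: the same AI supplier could land in different tiers depending on what data it is given.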

Key Considerations for Life Science Leaders

- Data Residency and Privacy: Sensitive data must be stored and processed in approved regions and handled in line with regulations such as GDPR, HIPAA, and applicable national laws.

- Validated Suppliers: Not all AI vendors offer the same depth of compliance. Look for certifications and recognized quality frameworks.

- Security Controls: Role-based access control (RBAC), single sign-on (SSO), and encryption in transit and at rest are non-negotiable.

- Use-Case Alignment: Not every AI use case needs the highest level of scrutiny; choose safeguards that match the stakes.

Real-World Applications: From Simple Tools to Complex Co-Pilots

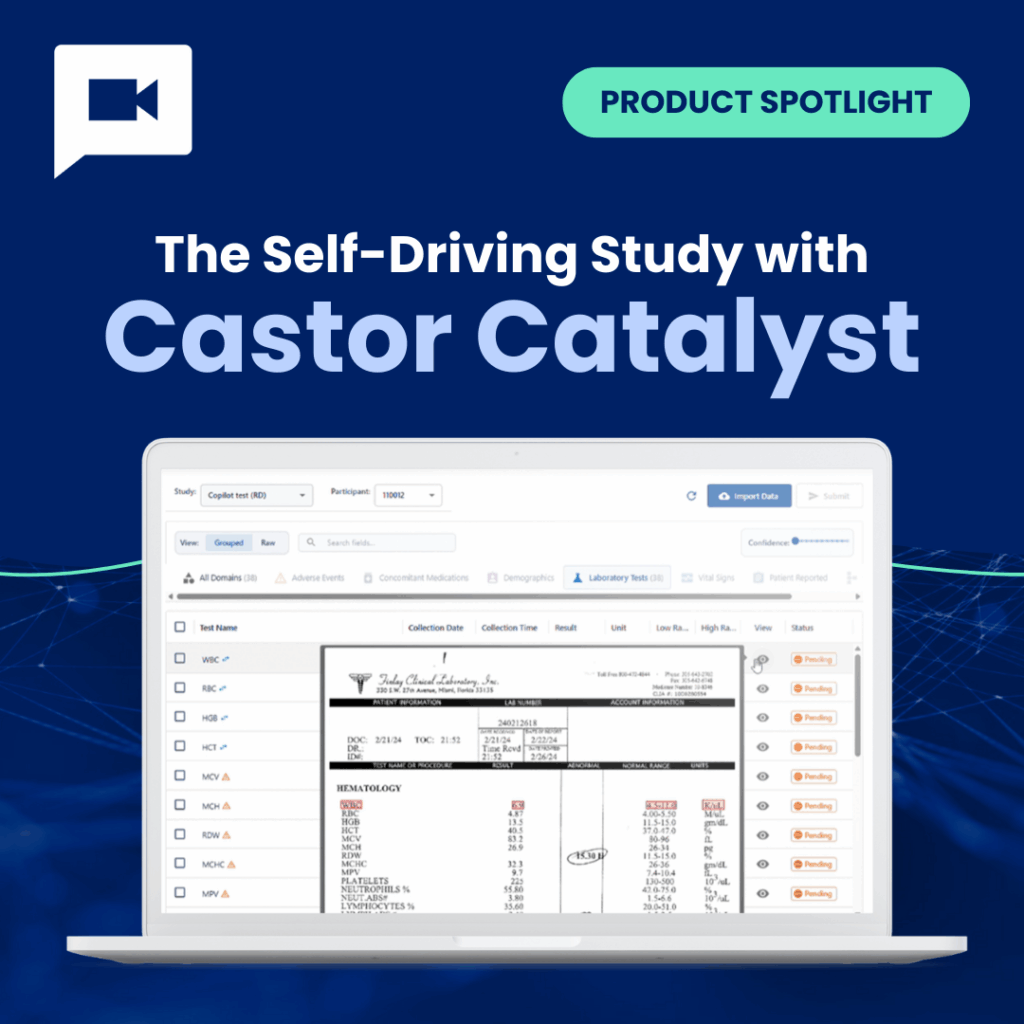

Many organizations start small, using AI for internal tasks that reduce manual effort, such as drafting documents. Because these tasks may involve confidential business data but no personal health information, they typically fall into Tier 2. As comfort and competency grow, teams often explore advanced AI “co-pilots” that read, interpret, and analyze clinical data or electronic medical records. This is Tier 3 territory, where elevated requirements for data storage and privacy apply.

Choosing the Right Partner

When evaluating AI solutions for clinical research, life science leaders should prioritize partners that have:

- A Defined Framework: Clear classification rules for how data is handled and validated.

- Deep Regulatory Understanding: Familiarity with EMA, FDA, HIPAA, GDPR, and more.

- Security-First Culture: Quality management systems, robust documentation, and an audit trail.

- Proven Scalability: The ability to adapt AI solutions from small pilot programs to large-scale deployments.

Companies at the forefront of clinical data management are taking exactly this kind of structured, deliberate approach to adoption, combining compliance with innovation.

Conclusion

AI has the potential to transform life sciences by accelerating discoveries, improving patient outcomes, and reducing operational burdens. Yet, the path to success must be grounded in a rigorous framework that classifies AI use cases by risk level, mandates the right security measures, and respects local privacy laws and regulations. By adopting a tiered model, life science organizations can confidently scale AI—one step at a time—while maintaining the highest standards of data integrity and patient safety.

Interested in learning more about how to leverage AI within a regulated clinical environment? Get in touch to discover how a tiered framework can help you innovate responsibly and shape the future of clinical research.